Much like many others, I’ve started playing Among Us with my friends. We’ve really enjoyed accusing each other and it has been fun convincing my friends that I’m not the imposter.

What had been bothering me, though, was having to do the same tasks over and over, especially the card swipe, where getting the timing right can take ages. I’ve even been accused of faking it, because it sometimes takes me a ridiculously long time to get through.

So, I gave myself a challenge.

How many of the tasks in Among Us can I automate?

The answer can be found at the end of this post.

Getting started with Python

I started with Python and the pyautogui, pyscreeze, keyboard and pygetwindow libraries.

So that I could move and resize the window without throwing off the simulated mouse clicks, I first wrote a wrapper around pyautogui. I pass it a position relative to the Among Us window, and it calculates the pixel position from the window’s position and size.

This ensures that, even if I move or resize the window, the mouse can click at the same position with the same code.

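Here is the mouse click wrapper as an example:

```python
import pyautogui


# Method of the wrapper class; self.amongus holds the game window's geometry.
def click(self, x, y, duration=0.1):
    """Click at (x, y), given relative to the Among Us window (0.0 to 1.0)."""
    pyautogui.click(
        (x * self.amongus.width) + self.amongus.left,  # relative x -> screen pixel
        (y * self.amongus.height) + self.amongus.top,  # relative y -> screen pixel
        duration=duration,
    )
```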

Multiplying the relative x coordinate by the window width and adding the window’s left offset gives the absolute pixel coordinate on the x axis. The y axis works the same way, using the height and the top offset.
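The same mapping can be written as a plain function, which makes it easy to check in isolation. This is a minimal sketch: the `Window` tuple and `to_absolute` are hypothetical stand-ins for the wrapper’s window object (`self.amongus`), of which only `left`, `top`, `width` and `height` are used.

```python
from typing import NamedTuple


class Window(NamedTuple):
    """Hypothetical stand-in for the game window's geometry (in pixels)."""
    left: int
    top: int
    width: int
    height: int


def to_absolute(window: Window, x: float, y: float) -> tuple[int, int]:
    """Map relative window coordinates (0.0 to 1.0) to absolute screen pixels."""
    return (
        int(x * window.width + window.left),
        int(y * window.height + window.top),
    )


# The window's center stays the window's center, wherever the window is and however big.
small = Window(left=100, top=50, width=800, height=600)
moved = Window(left=400, top=200, width=1600, height=1200)
print(to_absolute(small, 0.5, 0.5))  # (500, 350)
print(to_absolute(moved, 0.5, 0.5))  # (1200, 800)
```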

Comparing the colors

Next, I had to write code to get the color at the relative position and check it against a provided color with some tolerance.

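```python
import pyscreeze


def getColor(self, x, y):
    """Return the color at a position relative to the Among Us window."""
    im = pyscreeze.screenshot()
    return im.getpixel((
        int((x * self.amongus.width) + self.amongus.left),
        int((y * self.amongus.height) + self.amongus.top),
    ))


def checkColor(self, x, y, color, tolerance=15):
    """True if the color at (x, y) is within `tolerance` of `color` on every channel."""
    return self.tolerance(color, self.getColor(x, y), tolerance=tolerance)


def tolerance(self, color, scolor, tolerance=15):
    """Compare the R, G and B channels of two colors, ignoring any alpha channel."""
    r, g, b = color[:3]
    exR, exG, exB = scolor[:3]
    return (abs(r - exR) <= tolerance) and (abs(g - exG) <= tolerance) and (abs(b - exB) <= tolerance)
```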
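Stripped of the class, the check is a per-channel comparison: each of the R, G and B components must lie within `tolerance` of the expected value. Here is a standalone sketch (the name `colors_match` is hypothetical); slicing both colors to three channels also copes with screenshots that return RGBA pixels.

```python
def colors_match(expected, actual, tolerance=15):
    """Return True if every RGB channel differs by at most `tolerance`.

    Both arguments may be RGB or RGBA tuples; any alpha channel is ignored.
    """
    return all(abs(e - a) <= tolerance for e, a in zip(expected[:3], actual[:3]))


print(colors_match((0, 99, 71), (5, 110, 60)))      # True: largest difference is 11
print(colors_match((0, 99, 71), (0, 99, 71, 255)))  # True: alpha is ignored
print(colors_match((0, 99, 71), (0, 120, 71)))      # False: green is off by 21
```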

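With these helpers done, I created a loop that checks whether Ctrl and Alt are pressed. If so, it runs the following code, which prints the relative x and y coordinates of the cursor and the color at that position:

```python
import keyboard
import pyautogui
import pyscreeze

# Loop body: while Ctrl and Alt are held, print the cursor's relative position and pixel color.
if keyboard.is_pressed("ctrl") and keyboard.is_pressed("alt"):
    try:
        point = self.position()  # cursor position relative to the game window
        im = pyscreeze.screenshot()
        print(point)
        print(im.getpixel((pyautogui.position().x, pyautogui.position().y)))
    except IndexError:
        # getpixel raises IndexError if the cursor is outside the captured screenshot
        print()
    pyautogui.sleep(2)  # pause so a single key press prints only once
```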

This helper let me find the points and colors that identify each task, so the script can recognize an open task and solve it automatically. All I have to do is open the task.
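`self.position()` isn’t shown in the post. Presumably it performs the inverse of the click mapping, turning the cursor’s pixel position into coordinates relative to the window. Here is a hypothetical sketch of that inverse, using a stand-in `Window` tuple for the window geometry:

```python
from typing import NamedTuple


class Window(NamedTuple):
    """Hypothetical stand-in for the game window's geometry (in pixels)."""
    left: int
    top: int
    width: int
    height: int


def to_relative(window: Window, px: int, py: int) -> tuple[float, float]:
    """Map an absolute screen pixel to coordinates relative to the window (0.0 to 1.0)."""
    return (
        (px - window.left) / window.width,
        (py - window.top) / window.height,
    )


win = Window(left=100, top=50, width=800, height=600)
print(to_relative(win, 500, 350))  # (0.5, 0.5)
```

Values printed this way can be pasted straight into the task checks, because they don’t depend on where the window happened to be.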

Card swipe task

With these helpers in place, I started with the card swipe task, because it annoyed me the most.

```python
# The card swipe screen is open if all three sample pixels match.
if self.checkColor(x=0.7019628099173554, y=0.31724754244861486, color=(0, 99, 71)) and \
        self.checkColor(x=0.6797520661157025, y=0.8543342269883825, color=(71, 147, 52)) and \
        self.checkColor(x=0.5981404958677686, y=0.8945487042001787, color=(233, 121, 52)):
    print("Card")
    # Move to the card near the bottom of the screen and click it.
    self.moveTo(x=0.428202479338843, y=0.7444146559428061, duration=0.3)
    self.click(x=0.428202479338843, y=0.7444146559428061)
    # Move to the left end of the swipe slot and drag right; 0.6 s sets the swipe speed.
    self.moveTo(x=0.3114669421487603, y=0.42359249329758714, duration=0.3)
    self.dragTo(x=0.7902892561983471, y=0.46291331546023234, duration=0.6)
```

Inside my loop, I check the condition above. If all three colors match, the card swipe task is open.

The script then moves the mouse to the card, clicks it, and drags it along the swiping area at the right speed.

Using this method, I was able to automate almost all of the non-moving tasks.
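Every non-moving task follows the same pattern: a few pixel checks to recognize the task, then a fixed sequence of mouse actions. That means the detection half can be made data-driven. Below is a sketch under that assumption. The `SIGNATURES` table and `detect_task` function are hypothetical, the card coordinates and colors are copied from the snippet above, and `get_color` is any callable that returns the color at a relative position (such as `getColor`).

```python
from typing import Callable, Optional

Color = tuple[int, int, int]
Point = tuple[float, float]

# Hypothetical table: task name -> list of (relative point, expected color).
SIGNATURES: dict[str, list[tuple[Point, Color]]] = {
    "Card": [
        ((0.7019628099173554, 0.31724754244861486), (0, 99, 71)),
        ((0.6797520661157025, 0.8543342269883825), (71, 147, 52)),
        ((0.5981404958677686, 0.8945487042001787), (233, 121, 52)),
    ],
}


def colors_match(expected, actual, tolerance=15):
    """Per-channel comparison, ignoring any alpha channel (repeated so this runs alone)."""
    return all(abs(e - a) <= tolerance for e, a in zip(expected[:3], actual[:3]))


def detect_task(get_color: Callable[[float, float], tuple], tolerance: int = 15) -> Optional[str]:
    """Return the first task whose sample points all match, or None."""
    for name, points in SIGNATURES.items():
        if all(colors_match(color, get_color(x, y), tolerance) for (x, y), color in points):
            return name
    return None


# Fake screen for demonstration: returns exactly the expected colors.
fake_screen = {point: color for point, color in SIGNATURES["Card"]}
print(detect_task(lambda x, y: fake_screen.get((x, y), (0, 0, 0))))  # Card
print(detect_task(lambda x, y: (0, 0, 0)))                           # None
```

Adding a task then means adding a row to the table plus its action sequence, instead of another hand-written `if` chain.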

Solving the “Unlock Manifolds” task

But when I looked at the “Unlock Manifolds” task, I was slightly stumped. You have to press the numbers 1 to 10 in order, and their positions are randomized every time.

My first idea was to use OCR to read the numbers and work out their order.

I installed Tesseract, an OCR engine, along with its Python wrapper. But even after preprocessing each number into a black-and-white image, it didn’t recognize the numbers.

```python
from PIL import ImageOps

# Grab one number tile; i is the column and j the row of the tile grid.
tile_w, tile_h = 0.07902892561, 0.13717605004
img = self.screenshot(0.303202479338843 + i * tile_w,
                      0.3735478105451296 + j * tile_h,
                      tile_w, tile_h)
img = ImageOps.grayscale(img)
img = ImageOps.solarize(img, 1)
img = ImageOps.invert(img)
img = ImageOps.autocontrast(img, 10)
img = ImageOps.crop(img, 10)  # trim a 10-pixel border
threshold = 190
img = img.point(lambda p: 255 if p > threshold else 0)  # binarize to black and white
if img.mode != "RGB":
    img = img.convert("RGB")
```
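The offsets in the `self.screenshot(...)` call describe a grid. The tiles start at relative (0.303, 0.374), each tile is about 0.079 of the window wide and 0.137 high, and `i`/`j` step across columns and rows. Assuming the buttons form two rows of five (which fits ten numbers), the boxes for all tiles can be generated up front. This is a sketch: `tile_boxes` is a hypothetical helper, and its constants are copied from the snippet.

```python
# Constants copied from the OCR snippet (relative to the window size).
ORIGIN_X = 0.303202479338843
ORIGIN_Y = 0.3735478105451296
TILE_W = 0.07902892561
TILE_H = 0.13717605004


def tile_boxes(columns: int = 5, rows: int = 2) -> list[tuple[float, float, float, float]]:
    """Return (x, y, width, height) for every tile, row by row.

    Assumes the Unlock Manifolds buttons form a grid of `rows` by `columns`.
    """
    return [
        (ORIGIN_X + i * TILE_W, ORIGIN_Y + j * TILE_H, TILE_W, TILE_H)
        for j in range(rows)
        for i in range(columns)
    ]


boxes = tile_boxes()
print(len(boxes))  # 10
```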

The documentation mentioned that, for handwritten text, you could retrain the neural network to recognize it better.

But I had no idea how to do that, and by this time it was 2am.

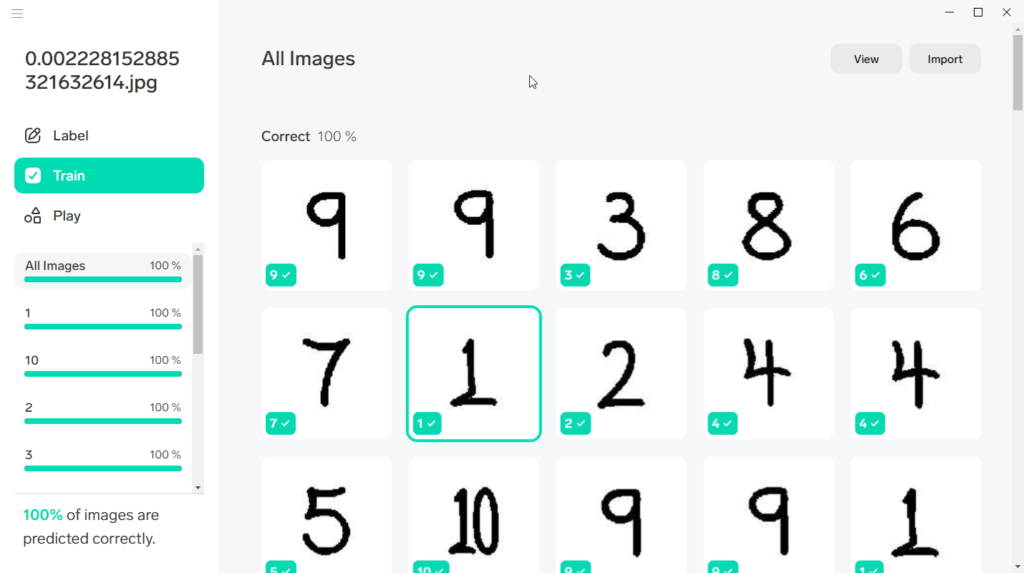

Then I remembered that I had a program called Lobe installed, which provided an easy interface to train a machine learning model.

Using Lobe to solve the task

I exported some samples of each number, then imported and labelled them in Lobe.

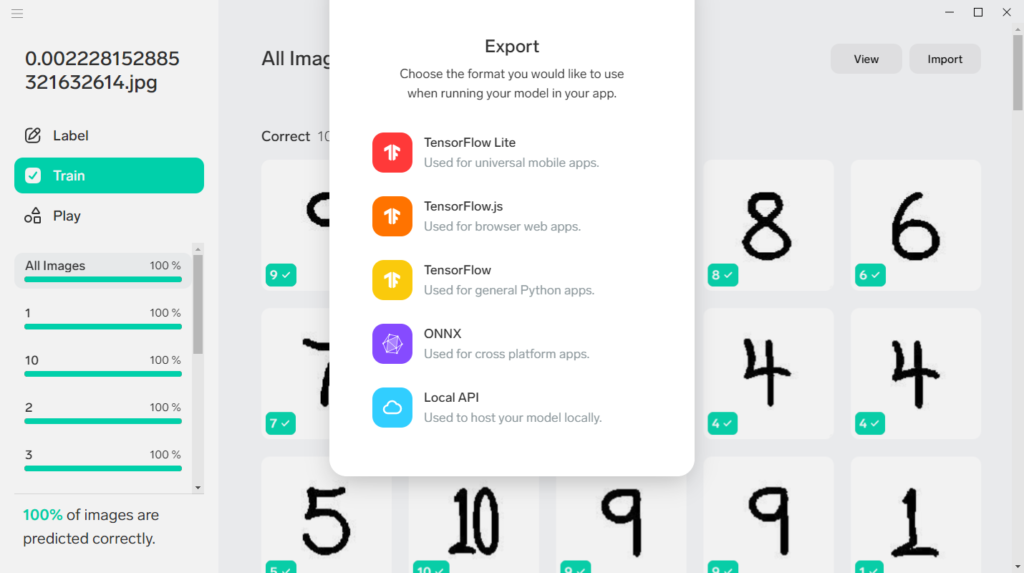

Lobe trained a model on them automatically, and I could export it as a Python TensorFlow project.

Keep in mind that it was 2am: with this app, I was able to train a machine learning model while half asleep.

The export creates a folder containing a class that you can import into your own project. So I could simply create an instance of the model, pass it the image and get back the prediction.

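```python
from Model import Model  # class generated by Lobe's TensorFlow export

model = Model()
model.load()

numbers = []  # predicted number for each tile (avoid shadowing the built-in `list`)

# ... Generate Image
outputs = model.predict(img)
numbers.append(int(outputs['Prediction']))
```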
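Once each tile has a predicted number, solving the task is a matter of sorting: click the tiles in ascending order of their predicted value. Here is a minimal sketch. It assumes `predictions[k]` holds the number recognized on tile `k` (one entry per tile, as the prediction loop above collects) and that each tile is described by a relative `(x, y, width, height)` box. `click_order` is a hypothetical name. It refuses to act unless every number appears exactly once, which guards against a misrecognized tile.

```python
def click_order(
    predictions: list[int],
    boxes: list[tuple[float, float, float, float]],
) -> list[tuple[float, float]]:
    """Return the relative center of each tile, ordered by its predicted number.

    Raises ValueError unless the predictions are exactly the numbers 1..len(boxes).
    """
    if len(predictions) != len(boxes) or sorted(predictions) != list(range(1, len(boxes) + 1)):
        raise ValueError(f"unexpected predictions: {predictions}")
    order = sorted(range(len(boxes)), key=lambda k: predictions[k])
    return [
        (boxes[k][0] + boxes[k][2] / 2, boxes[k][1] + boxes[k][3] / 2)
        for k in order
    ]


# Tiny example with three tiles of 0.25 x 0.25 in a row.
boxes = [(0.0, 0.0, 0.25, 0.25), (0.25, 0.0, 0.25, 0.25), (0.5, 0.0, 0.25, 0.25)]
print(click_order([3, 1, 2], boxes))  # [(0.375, 0.125), (0.625, 0.125), (0.125, 0.125)]
```

Each returned point can then be passed to the relative `click` wrapper.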

With this task working, I was done for the night and finally got some sleep.

Final results

Here is the final output of my evening of trying to automate as many tasks as possible in Among Us:

| Name | Type | Automated |
|---|---|---|
| Align Engine Output | Long | No |
| Calibrate Distributor | Short | No |
| Chart Course | Short | No |
| Clean O2 Filter | Short | No |
| Clear Asteroids | Long, visual | No |
| Divert Power | Short | No |
| Empty Chute | Long, visual | Yes |
| Empty Garbage | Long, visual | Yes |
| Fix Wiring | Common | Yes |
| Fuel Engines | Long | Yes |
| Inspect Sample | Long | No |
| Prime Shields | Short, visual | Yes |
| Stabilize Steering | Short | Yes |
| Start Reactor | Long | Yes |
| Submit Scan | Long, visual | Yes |
| Swipe Card | Common | Yes |
| Unlock Manifolds | Short | Yes |
| Upload Data | Short | Yes |
| **Automated tasks** | | **61%** (11 of 18) |
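As a quick check of the percentage, here is the table as a dictionary with the “Yes” rows counted:

```python
# Automated status per task, copied from the table above.
automated = {
    "Align Engine Output": False,
    "Calibrate Distributor": False,
    "Chart Course": False,
    "Clean O2 Filter": False,
    "Clear Asteroids": False,
    "Divert Power": False,
    "Empty Chute": True,
    "Empty Garbage": True,
    "Fix Wiring": True,
    "Fuel Engines": True,
    "Inspect Sample": False,
    "Prime Shields": True,
    "Stabilize Steering": True,
    "Start Reactor": True,
    "Submit Scan": True,
    "Swipe Card": True,
    "Unlock Manifolds": True,
    "Upload Data": True,
}
done = sum(automated.values())
print(f"{done} of {len(automated)} tasks automated ({done / len(automated):.0%})")
# 11 of 18 tasks automated (61%)
```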

I’m sure even more of them can be automated. I was close with “Align Engine Output” and “Calibrate Distributor”, and maybe I’ll get to them when I have more time.

The most difficult ones are those where things move or are too dynamic, like “Chart Course”, “Clean O2 Filter” and “Clear Asteroids”. In those cases, my automation is always too slow to click in time, and I would have to add some kind of prediction, so for now it’s faster to do them yourself.
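One simple form that prediction could take (purely an illustration, not something the post implements) is constant-velocity extrapolation. The script samples a moving target’s position twice, assumes it keeps the same velocity, and aims where the target will be once its own reaction delay has passed. `predict_position` and its parameters are hypothetical.

```python
def predict_position(p0, p1, dt, latency):
    """Extrapolate a target's position assuming constant velocity.

    p0, p1:  (x, y) positions observed `dt` seconds apart (p1 is the newer one).
    latency: seconds until the click will actually land.
    """
    vx = (p1[0] - p0[0]) / dt
    vy = (p1[1] - p0[1]) / dt
    return (p1[0] + vx * latency, p1[1] + vy * latency)


# A target moving right at 0.25 of the window width per second:
print(predict_position((0.25, 0.5), (0.375, 0.5), dt=0.5, latency=0.5))  # (0.5, 0.5)
```

This only helps for targets that move in straight lines at steady speed; anything that changes direction would need a better motion model.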

Video of me using my tool

Here is a video showing what happens when the tool runs through all of the tasks. It skips the tasks that aren’t automated, and the waiting and walking parts have been sped up.

I’m pretty happy with the results of this fun little project.

I won’t be publishing the source code, as I don’t want people using this without also sacrificing some sweat and tears to write the code themselves.

If you are interested and can show me that you wouldn’t use it for ‘evil’, you can, of course, contact me.